Co-authors: Jasmine QIAN, Sam LIU and Vivian GU

LiveRamp Engineering has a world-class infrastructure organization that supports the company’s developers who deal with big data on a daily basis. The Release Quality Engineering (QE) team has standardized assurance via automation testing for production monitoring dashboards to inspect, identify, and eliminate defects at the earliest time possible to reduce customer impact.

The challenge

In LiveRamp’s Analytics Environment Project, once a tenant was created by the tenant factory script, we used to verify the tenant’s permissions manually. Hundreds of tenants were created across multiple regions last year, and they were followed by on-call (OC) incidents caused by tenant permission errors across multiple third-party platforms such as Citrix, Tableau, Jupyter, and GCP. These errors hurt our customer experience, so solving them became a priority.

LiveRamp’s New Feature needs

- Intelligent: The platform needs to identify when to trigger a new round and which options to verify, and to rerun the automation test after an issue is fixed

- Stable: The platform needs to cover all the unexpected scenarios

- Extensible: The platform needs to support all project regions – AU/JP/US/EU

- Manageable: Prevent alert fatigue and provide actionable alerts

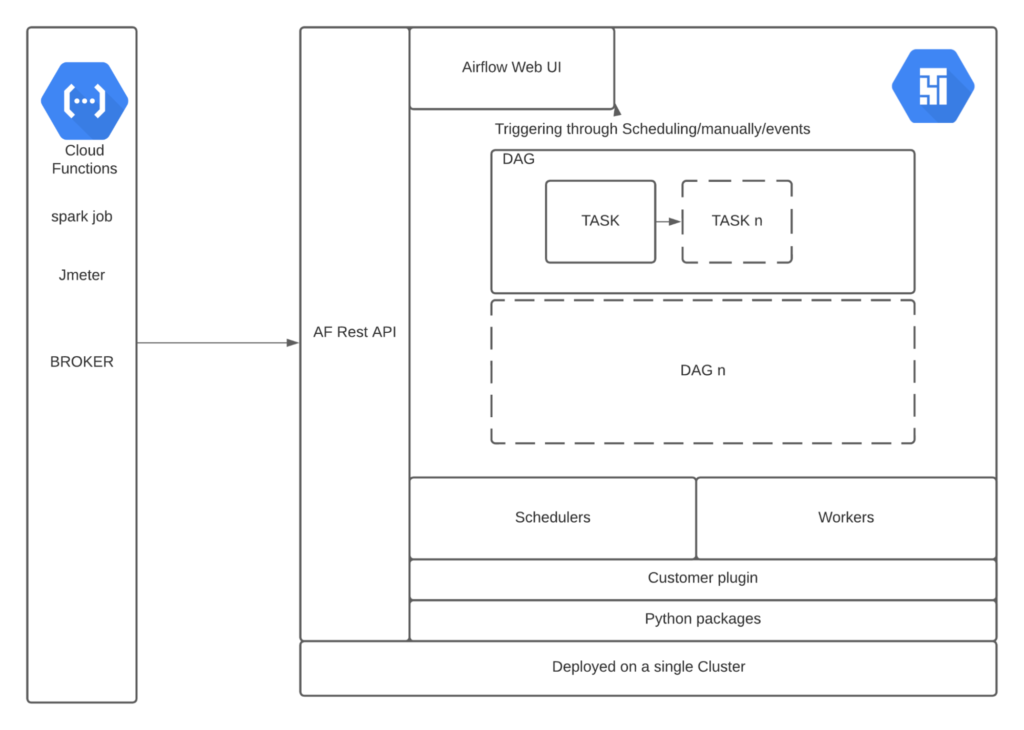

LiveRamp engineering’s approach: Apache Airflow

Apache Airflow is a platform created by the technical community to programmatically author, schedule, and monitor workflows. The data team usually uses Airflow to migrate, clean and sync data.

Why Airflow?

Airflow met LiveRamp Engineering’s needs. We wanted a stable microservice that would support testing, and Airflow was a suitable option. This was the first time our Release QE team had leveraged it for automation testing.

We selected Airflow for several advantages. It can be triggered by an outside broker through its REST API, and it uses a DAG (directed acyclic graph) to complete a series of tasks, which is similar to the QE test flow. Airflow can also quickly reschedule any DAG or kick off a DAG run manually.

Solution

We used Airflow in our test project called Tenant Verification.

We have a total of 11 settings needed for one tenant creation. Some are default, but others are optional. We have APIs, GCP resources and some settings set by Windows servers. How do you verify all of these settings? This verification is different from UI or API automation testing.

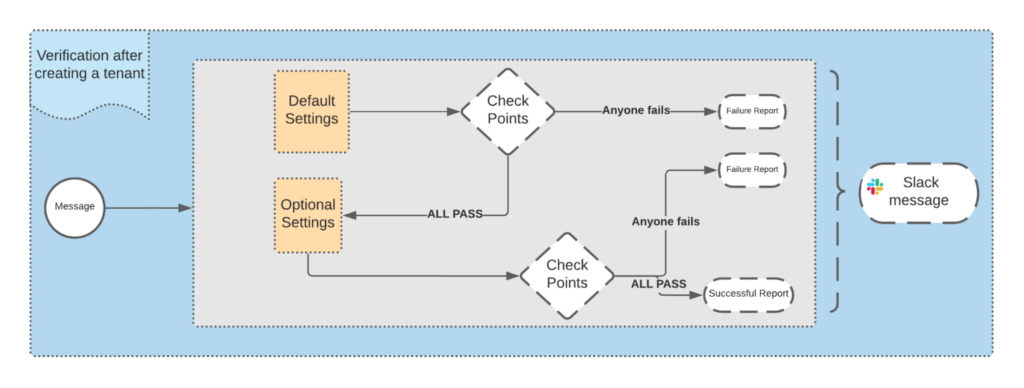

Let’s look at the verification Logic Flow.

After a tenant was created, the platform received the creation message through its subscription to Tenant Management. It then triggered the task flow on a schedule.

Each verification task flow checked the default settings first. If any default check failed, the flow interrupted the round and sent a failure report to our Slack channel.

Only when all of the default settings passed verification did it proceed to the next step and check the optional settings.

Similarly, if any optional check failed, it sent a failure notification. If all checks succeeded, we received a success report.
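The two-phase flow described above can be sketched in plain Python. This is only an illustration, not our production code: the names `Report`, `run_phase`, and `verify_tenant` are hypothetical, and the sketch assumes that every check in a phase runs before the round stops, so that one report can list all failures in that phase.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A check is any zero-argument callable that returns True on success.
Check = Callable[[], bool]


@dataclass
class Report:
    passed: bool
    failed_checks: List[str]
    phase: str  # phase in which the round ended: "default" or "optional"


def run_phase(checks: Dict[str, Check]) -> List[str]:
    """Run every check in one phase and return the names of the failed ones."""
    return [name for name, check in checks.items() if not check()]


def verify_tenant(default_checks: Dict[str, Check],
                  optional_checks: Dict[str, Check]) -> Report:
    """Check default settings first; check optional settings only if all defaults pass."""
    failed = run_phase(default_checks)
    if failed:
        # Interrupt the round: optional checks are never executed.
        return Report(False, failed, "default")

    failed = run_phase(optional_checks)
    if failed:
        return Report(False, failed, "optional")

    return Report(True, [], "optional")
```

The returned report carries enough information to decide whether a success or a failure message should go to Slack.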

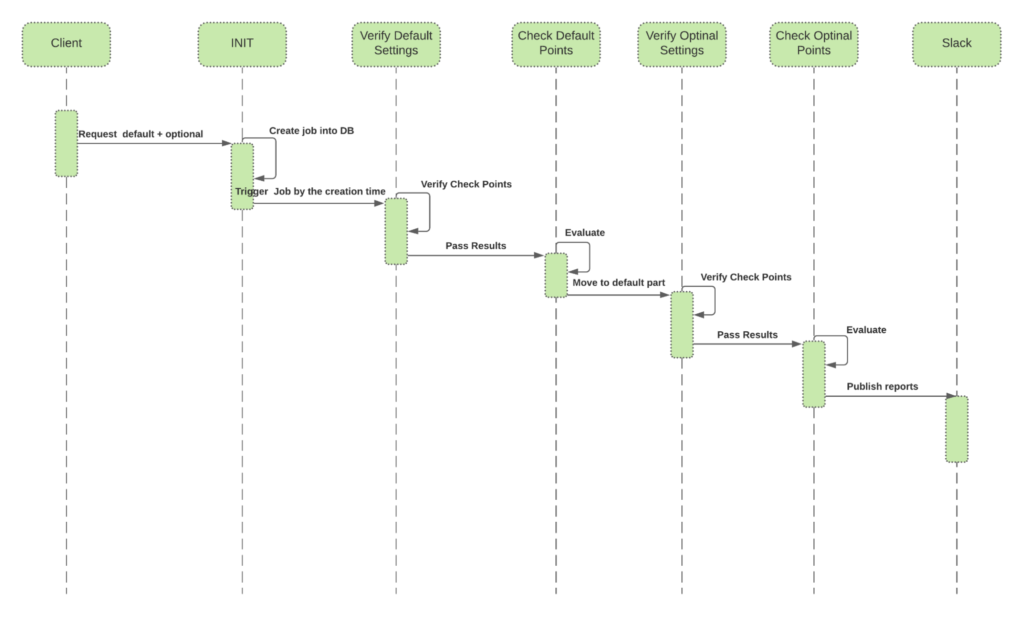

Sequence Diagram

Here is the Verification Sequence Diagram. It shows the designed tasks and cross communications between tasks.

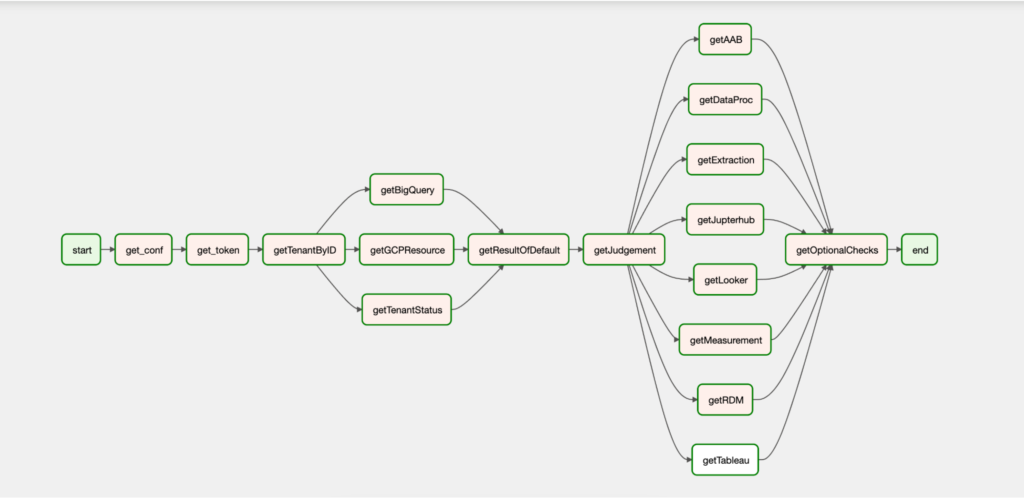

Graph View

Here is a view of the DAG. It begins on the left at the node marked “start” and then moves toward the right.

How we accomplished the tenant verification

There were two verification options:

- Airflow automatically runs the verification.

- The user manually triggers a DAG run through the Airflow API with specific parameters.
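As a rough sketch of the manual option, the snippet below builds the HTTP request for a DAG run. It assumes the stable REST API of Airflow 2.x (`POST /api/v1/dags/{dag_id}/dagRuns`) with basic authentication enabled; older Airflow versions expose a different, experimental endpoint. The host, DAG id, credentials, and `conf` keys are hypothetical placeholders, not the values used in our project.

```python
import base64
import json
import urllib.request


def build_trigger_request(base_url: str, dag_id: str, conf: dict,
                          username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a request that starts a DAG run with parameters."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    # Run-specific parameters travel in the "conf" field of the JSON body.
    body = json.dumps({"conf": conf}).encode("utf-8")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


# Hypothetical example values; replace them with your own deployment's settings.
request = build_trigger_request(
    "http://airflow.example.com",
    "tenant_verification",
    {"tenant_id": "tenant-123", "region": "US"},
    "qe_user",
    "qe_password",
)
```

Passing `request` to `urllib.request.urlopen` would send it; the sketch stops short of that so it can be read and run without a live Airflow instance.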

Result notification

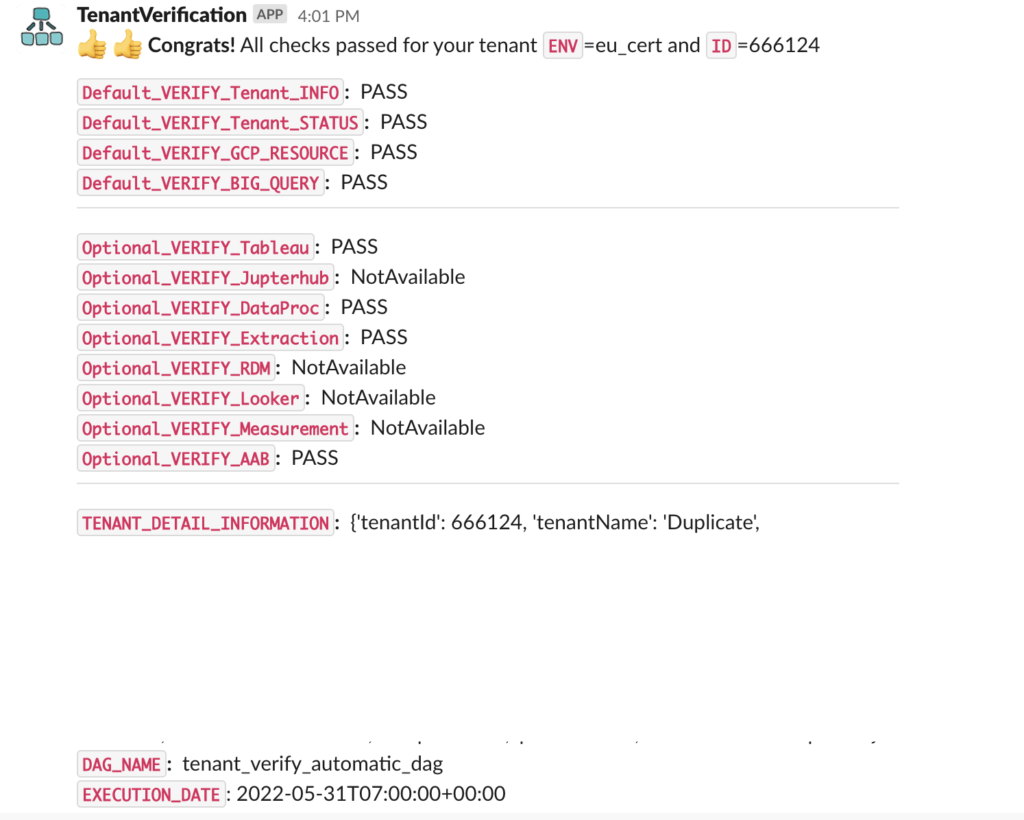

Successful message:

The success message showed the environment (ENV) in which the run took place and let us quickly find the details of each run. Most importantly, it confirmed that all the checkpoints succeeded as expected.

A success status message was automatically sent to the designated Slack channel if all checkpoints passed. This feature instilled confidence that the tenant was created accurately.

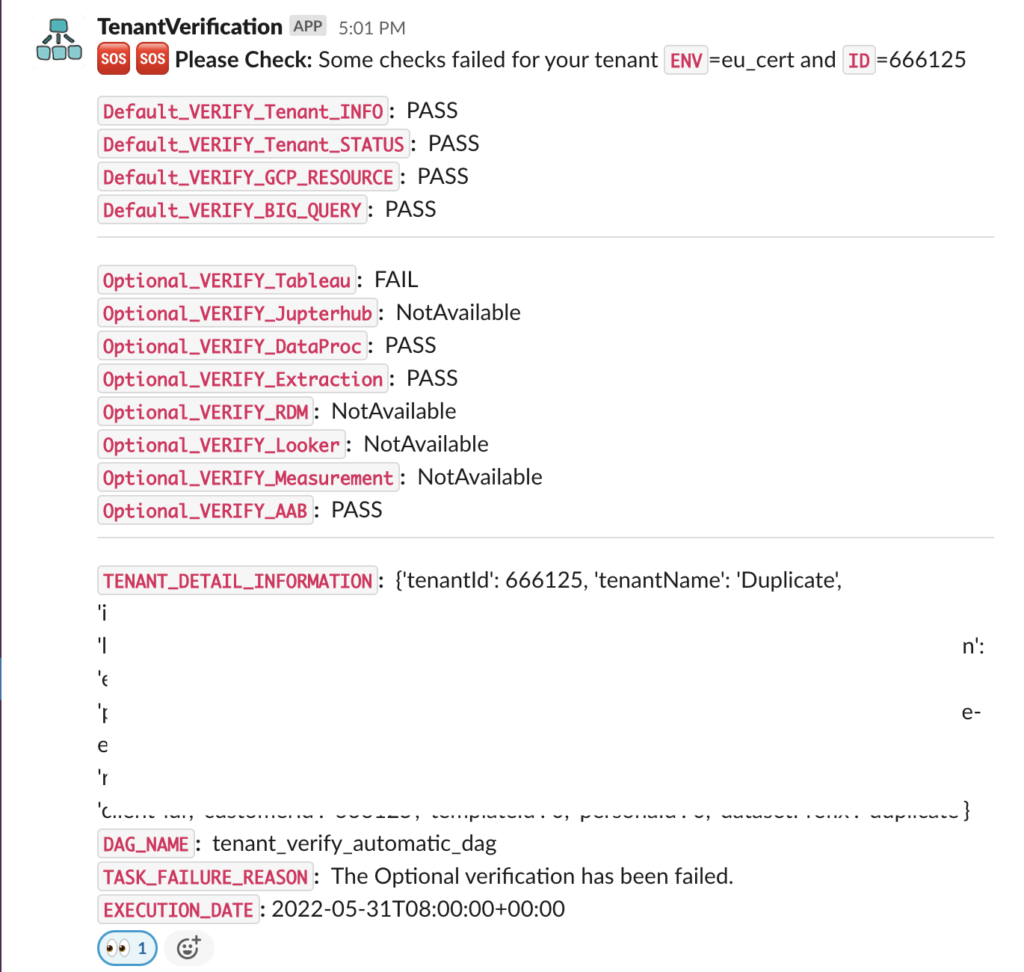

A failure message (similar to the screenshot) was sent to the Slack channel if there was an error, so we could quickly see which check had failed.
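To illustrate how such notifications can be assembled, here is a minimal sketch that builds the JSON payload for a Slack incoming webhook, which accepts a body with a `text` field. The function name, message wording, and emoji are assumptions for illustration and do not reproduce the messages in the screenshots.

```python
import json
from typing import List


def build_slack_payload(env: str, tenant_id: str, failed_checks: List[str]) -> str:
    """Return the JSON body for a Slack incoming-webhook message."""
    if failed_checks:
        # List every failed check so the reader sees at once what to fix.
        lines = [f":x: Tenant verification FAILED in {env} for tenant {tenant_id}"]
        lines += [f"- {name}" for name in failed_checks]
    else:
        lines = [f":white_check_mark: Tenant verification passed in {env} for tenant {tenant_id}"]
    return json.dumps({"text": "\n".join(lines)})
```

Listing only the failed checks keeps the alert short and actionable, which helps with the alert-fatigue goal stated earlier.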

Summary

With Tenant Verification, we receive each newly created tenant request as soon as the tenant is submitted. Airflow triggers an automatic process after an hour or so to check the setup. If any setup check fails, we send out alerts and escalate to the DEV team to look into it. This allows us to solve issues much more quickly and creates a more positive customer experience.

We hope this helps others in their infrastructure journey.

LiveRamp is a data enablement platform designed by engineers, powered by big data, centered on privacy innovation, and integrated everywhere.
